AI – threat or opportunity?

In a new report on artificial intelligence for worker management, the European Agency for Safety and Health at Work warns of what could happen if the technology is misapplied in workplaces. At the same time, AI is considered crucial to the digital green transition in both the Nordic and the Baltic countries.

There is still no commonly accepted definition of what AI is. Researchers’ philosophical discussions about what constitutes intelligence have been going on for decades. Can computers really think? In working life we can skip the philosophy, however, and use a simpler definition of AI:

AI means programming computers to make decisions that humans used to make.

The AI systems now being built gather information, often in real time, about employees, the work they carry out and which digital tools they use. The information is then fed into AI-based models that make automated or semi-automated decisions, or that provide management with information to help them make decisions about the labour force.

Reducing risk

The report from the health and safety agency says AI can be used to minimise the risk of burnout or to prevent accidents. A truck driver can, for instance, be monitored continuously to see how often they blink or yawn, or whether they look tired. The driver gets a warning when the risk of falling asleep at the wheel is considered high, or the truck stops by itself and parks where it is safe.

Warning a worker who has forgotten to bring safety equipment to a high-up job sounds reasonable, but some of the AI tools mentioned in the report – which are considered to possibly have a positive effect – still create some unease.

"Additionally, AIWM systems (AI-based worker management) that can listen in on workers talking and that are able to analyse this information can identify and detect cases of bullying or sexual harassment,” the report says.

In the same way, AIWM can analyse emails or other text. A research report describes an AI system that can analyse the relationship between certain personality traits and potential online sexual harassment behaviour.

Negative consequences

The negative consequences of AI might never have been intended, but can arise because the employee can no longer control the pace and manner in which a task is completed. Some of the dangers highlighted in the report include:

- Increased work intensity.

- Workers losing control over their jobs.

- Workers are forced to behave like machines, or the “datafication” of workers – treating workers as collections of digital data.

- The border between work and private life gets blurry.

- Personal integrity is threatened when for instance information about toilet breaks is gathered.

- Customers can “punish” workers by giving them service performance points.

- AI systems make workers less motivated and work appears meaningless.

- Workers can be isolated and feel lonely since most communication happens through a computer and not with colleagues.

- Trade unions are weakened as a consequence.

- A lack of transparency about why decisions are made.

- AI systems do not work and put workers in dangerous situations.

So far we have been talking about AI systems that are developed and introduced with the aim of improving workflow and helping both workers and companies. That is not always the aim, however.

Anti-union mapping

“Trade unions’ efforts to organise labour has been a central goal for predictive technology surveying and categorising social media. For instance, such technology has been used to anticipate labour unrest in Walmart stores,” says Gabriel Grill, a researcher at the University of Michigan, who has published several reports about how AI is used to map and thwart legitimate political protest.

Gabriel Grill, who does research on how AI is used to suppress trade union work. Photo: Björn Lindahl

He points out that a company like the Pinkerton detective agency has been hired by Amazon to fight attempts to unionise workers. Google, Apple and Facebook have denied using Pinkerton, but have hired people who used to work for the agency.

Earlier this year, the company Clearview AI was fined 10 million dollars by the UK’s Information Commissioner's Office. The company specialises in facial recognition and one of its customers is the UK police force. The company’s databases contain 22 billion images of people, gathered from social media. The company was also told to delete all images of British citizens.

Security services’ focus has changed from gathering information about enemy countries for analysis to trying to predict whether their own country’s citizens are planning terror attacks. The Norwegian Police Security Service recently asked for easier access to information from psychiatric and health institutions in order to identify people who are both radicalised and mentally unstable.

Another issue is how AI will affect cyber security. IBM’s annual report on data breaches shows that 83 % of the 550 companies it surveyed across 17 countries had suffered more than one data breach. The average cost of a data breach was 4.3 million dollars. Health companies have seen the fastest growth in cost per data breach: between 2020 and 2022 it increased by 41.6 %, to an average of 10 million dollars.

How do you fight hackers?

But what is the best way of fighting increasingly sophisticated cyber-attacks?

“More openness,” answered Kristjan Järvan, Estonia’s Minister of Entrepreneurship and Information Technology, at the Digital North conference held in Oslo on 7 September. The conference is part of the Nordic-Baltic cooperation on digitalisation.

Kristjan Järvan, Estonia’s Minister of Entrepreneurship and Information Technology.

“Estonia is now the last country to be the victim of new virus attacks because the hackers know that we will immediately share information with other countries.”

Estonia was the victim of a massive cyber-attack in 2007 in connection with the relocation of a Soviet war monument.

“We took a real hit to the face and some of our digital services that our citizens had become used to had to be shut down. But we have learned from what happened and work night and day to improve our security. When Nato established a cyber defence centre of excellence in 2014, it chose Tallinn.”

Its own digital embassy

Estonia has even established its own “digital embassy” in Luxembourg where the country’s most critical and secret information is held in case Estonia were to be occupied.

Estonia has also helped Ukraine by sharing information about how the country should defend itself against cyber-attacks.

“Digitalisation is happening very fast in Ukraine now because the government can make decisions it would not be able to make in peacetime. Citizens of Ukraine can now access 160 public services through their mobile phones,” says Kristjan Järvan.

This year’s Digital North conference focused on how the Nordic and Baltic countries should share more information, especially when it comes to the management of the Baltic Sea.

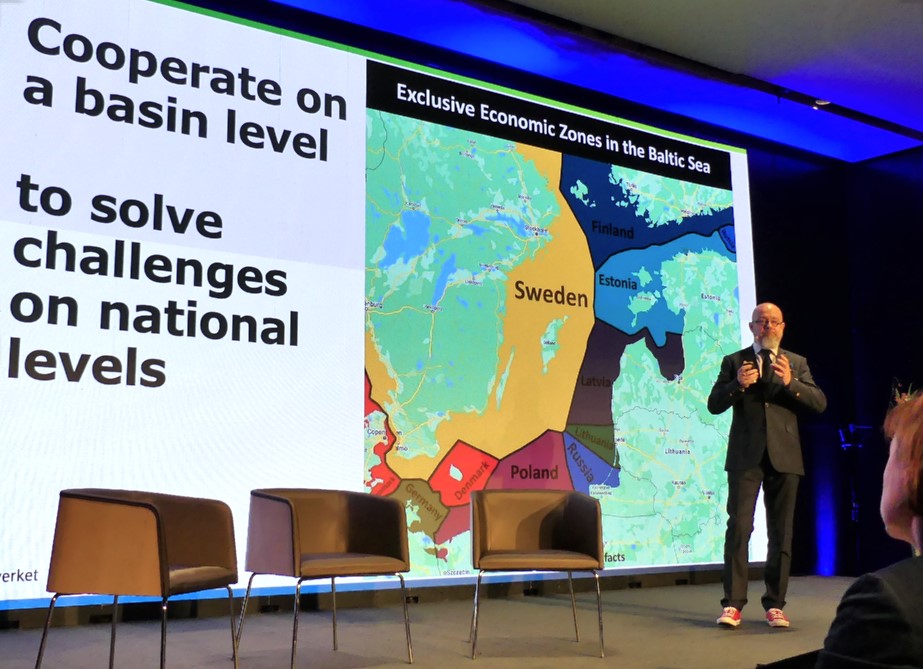

“It is not possible to have a sensible administration of fisheries and other resources when the Baltic Sea is divided in this way, if not all the countries share their information,” said Njål Tengs-Hagir, head of marine infrastructure at the Norwegian Mapping Authority, using a map to show how divided the Baltic Sea is.

Njål Tengs-Hagir with a map of the Baltic Sea, divided between nine coastal states: Denmark, Sweden, Germany, Poland, Estonia, Latvia, Lithuania, Russia and Finland (with Åland as an autonomous area). Photo: Björn Lindahl

But how do you make information more open, so that researchers and civil servants can for instance retrieve information from databases in a neighbouring country, while still protecting yourself from data breaches and virus attacks? The answer lies in the architecture itself, said several of the speakers.

“If we cooperate on the construction of a secure system for sharing information across national borders, a system that solves the technical issues about how this can be done securely while taking into account the legal aspects and how to regulate the system, we open up for enormous opportunities,” said Kristjan Järvan.

X-Road for digital information

Estonia already has a system, X-Road, which is used to share information within the country. It is described as Estonia’s digital spine. In order to secure all information sharing, all outgoing data is given a digital signature while also being encrypted. All incoming data is authenticated and logged.
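The principle described above, signing what goes out and authenticating and logging what comes in, can be illustrated with a minimal sketch. This is not X-Road's actual implementation or API. It assumes a shared secret and an HMAC tag as a simple stand-in for a real digital signature, it keeps the log in memory, and it leaves encryption to the transport layer. All function and variable names are hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret; a stand-in for a real signing key pair.
SECRET_KEY = b"demo-key-not-for-production"

# In-memory stand-in for a persistent message log.
audit_log = []


def sign_outgoing(payload: dict) -> dict:
    """Serialise the payload and attach an authentication tag."""
    body = json.dumps(payload, sort_keys=True).encode("utf-8")
    tag = hmac.new(SECRET_KEY, body, hashlib.sha256).hexdigest()
    return {"body": body.decode("utf-8"), "signature": tag}


def receive_incoming(message: dict) -> dict:
    """Authenticate an incoming message, log the outcome, return the payload."""
    body = message["body"].encode("utf-8")
    expected = hmac.new(SECRET_KEY, body, hashlib.sha256).hexdigest()
    accepted = hmac.compare_digest(expected, message["signature"])
    # Every message is logged, whether or not it passes the check.
    audit_log.append(
        {"digest": hashlib.sha256(body).hexdigest(), "accepted": accepted}
    )
    if not accepted:
        raise ValueError("signature check failed")
    return json.loads(body)
```

A message produced by `sign_outgoing` passes through `receive_incoming` unchanged, while a message whose body has been altered in transit is rejected and the rejection is recorded in the log.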

The European Agency for Safety and Health at Work ends its report with several recommendations, but the most important one is that AI-based worker management systems must be designed, implemented and handled in a way that makes them secure and transparent. Workers must be consulted and have access to the same information as the developers on all levels so that humans and not machines are in control at all times.

A panel debate at the Digital North conference on how digitalisation can also be green and sustainable. From left, above: Morten Dalsmo, Sintef Digital; Kristjan Järvan, Estonia’s Minister of Digitalisation; Siri Lill Mannes, the Norwegian Prime Minister’s Office; and Artūrs Toms Plešs, Latvia’s Minister of Environmental Protection.